What can slow down execution of SAP PI Default Delete Job?

Is your delete job deleting enough? is it slow? Let’s have a look at what are the factors which can slow down delete job in PI.

Deleting data from SAP PI tables using Default DELETE Job has been very simple and easy back from early days, just activate the job provided by SAP, only thing we need is to decide is what time we want to run & retention periods of your messages, if this is done then we are all set, no more worries on database table growth, this is specifically true when message volume handled by PI system is fairly small, without doing any changes if we just let DELETION JOB run, we are good.

Trouble starts when you are handling 6-8 million or more Asynchronous messages a day

I want to highlight what can slow down execution of delete job, before that let’s see how it works by answering below questions.

- What happens in background when you start Execute Now on delete job?

- Which table’s data are deleted when it says deleted 390 records in below example?

- What can slow down execution of the delete job?

- How many delete statements are executed by DELETE job for tables under scanner? How database treats these statements?

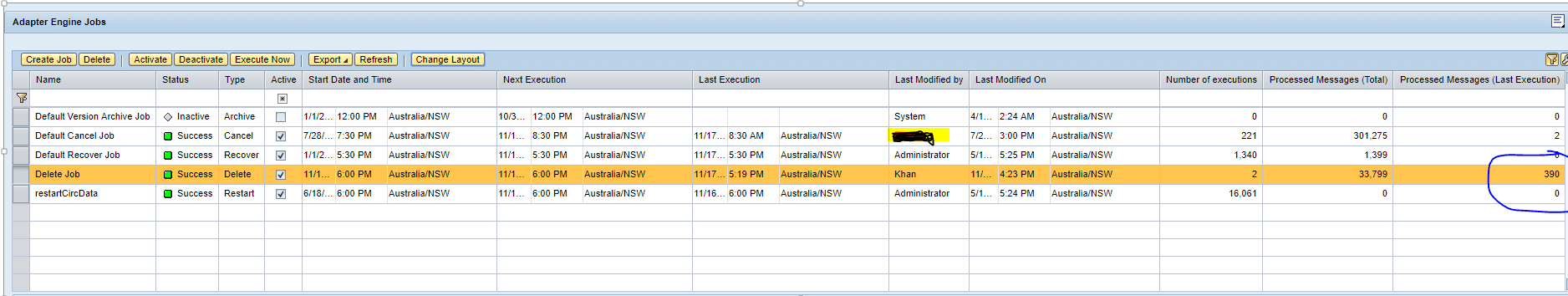

Example, I have below result of delete job where it says it has deleted 390 records.

Question 1: What happens in background when you start Execute Now on delete job?

SAP has divided tables which delete job looks into mainly two category.

- Control Tables: Other which is used to control the message flow, like checking duplicate message processing (Ex: Message ID duplicate checking, JMS duplicate checking), checking order of EOIO message sequence.

- Data Tables: One that holds business data & details of execution of business data (Interface execution & logs).

Control Tables:

- BC_MSG_DUP_CHECK

- BC_MSG_SEQ_STAT

- BC_MSG_SEQ_NBR

- BC_MSG_BLIST

- XI_AF_SVC_ID_MAP (Even though this table is not part of Default Delete job, its in this list purely from control table point of view, this table is not part of Messaging system duplicate but mainly used by Adapter framework hence deletion in this table is handled by separate job).

Data Tables:

- BC_MSG BC_MSG

- BC_MSG_VERSION

- BC_MSG_AUDIT

- BC_MSG_LOG

- BC_MSG_LOG_STAT

In the current implementation of DELETE Job (PI 7.5 SP7) when you run the delete job, it creates 2 SAP managed threads which runs in parallel, DELETE job finishes when these two parallel threads are in final state (Either success or failure).

- JVM Thread 1: Tries to delete expired records from Control tables based on retention periods.

- JVM Thread 2: Tries to delete expired records from Data tables based on retention periods.

if JOB status is not coming back after few minutes or few hours that means job is still running, status will not appear till its success or failed so if number of records to be deleted are more it will take long time for status to appear.

In above example, our delete job said it deleted 390 records, what does it mean, which table it deleted?

390 is total Asynchronous and Synchronous messages it deleted from BC_MSG & BC_MSG_LOG, did it delete only from these two tables, answer is NO, it did deleted from control tables as well, but details are not reported yet by current version of DELETE Job.

So which all tables it deleted? Check the logs below, as you can see in below logs, it did run deletion in other tables as well.

This should also answer second question we had at top Question 2: Which table’s data are deleted when it says deleted 390 records in below example?

Question 3: What can slow down speed of DELETE Job?

Most important question, what can slow it down or how to make it fast, these things comes into picture when PI is processing huge volumes.

Answers revolves around the fact that we have to make sure below threads finishes sooner and deletes more, remember both threads should finish for one instance of DELETE job to complete and report results.

- JVM Thread 1: Deletes expired records from Control tables based on retention periods.

- JVM Thread 2: Deletes expired records from Data tables based on retention periods.

Factors which effects greatly are:

- Mismatch in configuration of retention periods for control tables and data tables for ex: Control table retention period is 30 days where as for data tables its 3 days.

- Huge backlogs in above tables, back logs are mainly because DELETE job was not running for few days. (Very little chances this can happens but this can happen at least in lower environments).

Messaging System Property which matter for DELETE Job:

- “messaging.duplicateCheck.persistTime”: controls the expiry of records in BC_MSG_DUP_CHECK tables (Default 30 days)

- “messaging.log.retainTime”: controls expiry of records in BC_MSG and BC_MSG_LOG (Default 7 days)

- “messaging.orphanAuditLog.retainTime”: controls orphan entries in BC_MSG_AUDIT table (Default 30 days)

In our Production system, current values are

1 day for messaging.duplicateCheck.persistTime & messaging.orphanAuditLog.retainTime

3 days for messaging.log.retainTime

If you want to check how DELETE job is performing in your case by increasing the trace severity of the following location to Info: com.sap.engine.messaging.impl.core.job.executor.DeleteExecutor

This could be done from SAP NetWeaver Administrator – Troubleshooting->Logs and Traces->Log Configuration -> choose Show: Tracing Locations and enter the Location as given above. Then choose Severity ‘Info’ from the corresponding dropdown and Save Configuration button. You can then track the job execution from Log Viewer again in Troubleshooting->Logs and Traces->Log Viewer -> select View->Open View->Developer Traces, then enter the above location in the filter row under Location column.

Question 4: How many delete statements it runs on above tables? How database treats these statements?

When DELETE JOB is executed, current algorithm used is, it will prepare 1 delete statements for each table with smaller sizes to delete in few interval, for ex for BC_MSG_DUP_CHECK table, field PERSISTED_AT field will have smaller values to run when statements are running.

Based on messaging.dbUpdates.batchSize & messaging.bulkCommit.threshold it will keep firing smaller sets of statements.

This is heart of the problem:

No matter how much you fine tune, there are few things not in our control, lets see below example.

Suppose you have 100 million messages in BC_MSG_DUP_CHECK table for simple reason you didn’t change messaging.duplicateCheck.persistTime whose default value is 30 days & daily intake is close to 3 to 4 million.

You will find below traces when delete jobs are running.

If above statement tries to delete 20 days older messages, even though the field PERSISTED_AT is indexed, statement will do full index scan as shown below picture.

So if it goes for full index scan, it might run for 8 hrs for deleting anything older than 3 days that means to delete 97 million messages and there is nothing you can do about this.

Note(Please ignore the fact i have used (sysdate-3) as value for timestamp field).

If you have DELETE job not running faster, that means there are hell lot of records to delete and might be its doing FULL INDEX Scan rather than INDEX RANGE scan.

Summary:

BC_MSG_DUP_CHECK is the killer in high volume systems where default value is not changed from 30 days to match with retention period, SAP has confirmed they have customer up to 10 million Async messages per day and delete job has been running fine by few changes as suggested in SAP Note 2142864. It’s important to change of messaging.dbUpdates.batchSize and messaging.bulkCommit.threshold default values so that each execution it can delete larger chunk, we have seen significant increase in number of records it deletes by correcting retention periods and increasing batchSize, bulk commit & parallelWorkCount.

In our case: BC_MSG_DUP_CHECK had 102 million entries because of this the thread1 was running for long time, our delete job never gave status for more than 2 weeks so finally we are preparing to manually deleted records from table based on field PERSISTED_AT. Data tables we have size less than 15 million so we are hoping once entries from BC_MSG_DUP_CHECK are deleted and implementing note 2142864 we should be able to catch up backlogs in data tables.

I tried to highlight factors in Application server and database which can cause slowness, Application server is where we can fine tune to by changing right values based on volume we handle. Database side i don’t think we have any room. at the max you can reorg table to rebuild indexes but that will not have considerable impact.

DELETE JOBS Sample thread execution:

New NetWeaver Information at SAP.com

Very Helpfull